In my previous article, I gave a quick introduction to neural networks and how they can become really smart. Now, think of the brains of different organisms. Is it mere coincidence that humans, the smartest of all species, have such large brains for their body size? Not really. Humans are smart in large part because their brains have trillions of connections, which give them the capacity to learn many different things. Just like human brains, the capacity of a neural network to learn something depends on how big and complex it is. As a general rule, bigger networks can learn more.

But neural networks are trained on computers, and bigger networks are a bigger burden for a computer. If you ask your computer to do a million multiplications, it can finish in less than a second. If you ask it to do a billion multiplications, as a big neural network might demand, it will take noticeably longer. And since nobody likes waiting around, getting the right complexity for a neural network is key.

This is essentially the problem of model search: the attempt to find the right (or at least, a good enough) neural network model for a given problem. There are two key aspects to model search: architecture search and hyperparameter search.

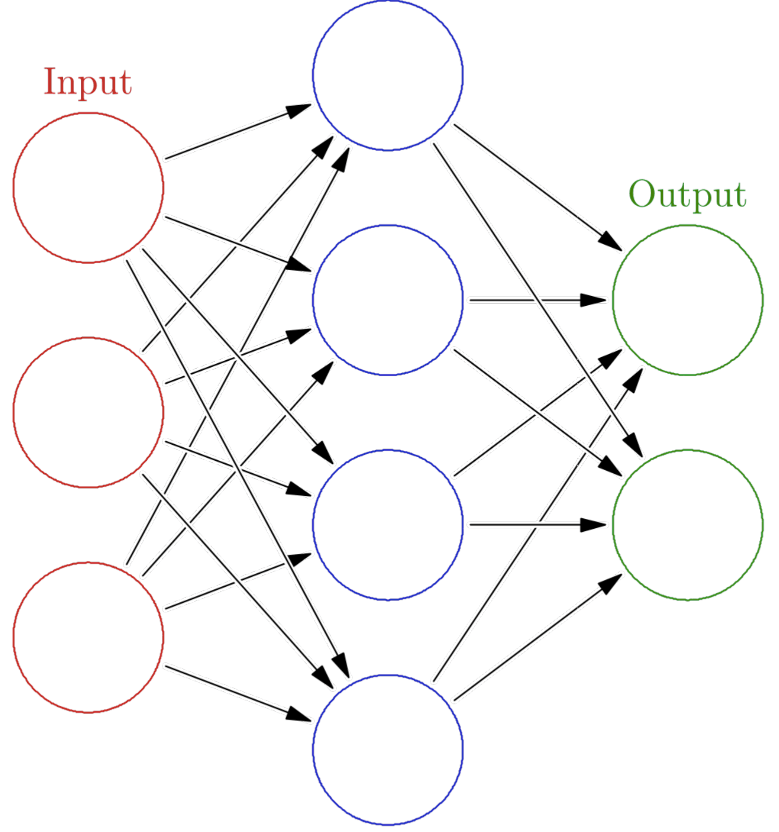

Architecture search is concerned with the structure of the network. For example, the network I showed in my previous article (shown above) has 3 layers: red, blue, and green. It has a total of 20 edges, the black lines with arrows. This is a particularly simple network. Bigger networks are deeper and can have more than a hundred layers. Moreover, these layers can be of different types. Details about the different types of layers are beyond the scope of this article, but I would like to mention that a network which sees an image and tries to recognize what it is will have different types of layers from a network which analyzes some text and tries to predict the next word. Figuring out which types of layers to have in a network, how many of each are required, and which ones connect to which: these questions constitute the problem of architecture search.
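To make the idea of network size a bit more concrete, here is a minimal Python sketch that counts edges. It assumes every node in one layer connects to every node in the next (a "fully connected" network), which is an assumption on my part. The function name `count_edges` and the layer sizes are made up for illustration: 3, 4, and 2 nodes is just one arrangement that adds up to 20 edges, and not necessarily the one in the figure.

```python
def count_edges(layer_sizes):
    """Count edges in a fully connected feed-forward network.

    Assumes each node in a layer connects to every node in the next layer.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical small network: 3 layers with 3, 4, and 2 nodes.
small_network = [3, 4, 2]

# Hypothetical big network: 100 layers of 1000 nodes each.
big_network = [1000] * 100

print("small network edges:", count_edges(small_network))
print("big network edges:", count_edges(big_network))
```

Under these assumptions the small network has 20 edges, while the big one has 99 million. Every edge costs at least one multiplication each time the network looks at an example, which is why size matters so much.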

Hyperparameters, on the other hand, are the things which affect the way a network learns. For example, how fast will a network learn something new? You don’t want your kid to learn the whole alphabet in one day, only to forget half the letters the next day. But you also don’t want him to take a year to learn the alphabet. Thus, choosing the learning rate is important. There are other hyperparameters, but perhaps none is as important as the learning rate, which has been the subject of a great deal of research. Knowing how to choose hyperparameters properly is an important aspect of model search.
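The effect of the learning rate can be seen even without a neural network. The sketch below is a toy example, not how real networks are trained: it uses plain gradient descent to find the value of `w` that minimizes `w * w` (the answer is 0). The function name `gradient_descent`, the starting point, and the three learning rates are all illustrative choices of mine.

```python
def gradient_descent(learning_rate, steps=50, start=5.0):
    """Minimize f(w) = w^2 with plain gradient descent and return the final w."""
    w = start
    for _ in range(steps):
        grad = 2 * w                # derivative of w^2 with respect to w
        w -= learning_rate * grad   # step against the gradient
    return w


# Too small, reasonable, and too large (all values chosen for illustration).
for lr in (0.001, 0.1, 1.1):
    print(f"learning rate {lr}: final w = {gradient_descent(lr):.4f}")
```

With the smallest rate, `w` has barely moved from its starting value after 50 steps. With the middle rate it ends up very close to 0. With the largest rate each step overshoots and `w` moves further and further away from the answer.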

So there you have it. Model search is the search for which neural network models to deploy for a given task. It is what my research currently deals with, so wish me luck!

Published on August 7th, 2019. Last updated on April 1st, 2021.